The following program uses the basic Newton's method to find the minimum of a function .

The program prompts you to enter for each variable (i.e. dimension):

1. The maximum number of iterations.

2. The initial guesses for the optimum point for each variable.

3. The tolerance values for each variable.

The program displays intermediate values for the function and the variables. The program displays the following final results:

1. The coordinates of the minimum point.

2. The minimum function value.

3. The number of iterations

The current code finds the minimum for the following function:

f(x1,x2) = x1 - x2 + 2 * x1 ^ 2 + 2 * x1 * x2 + x2 ^ 2

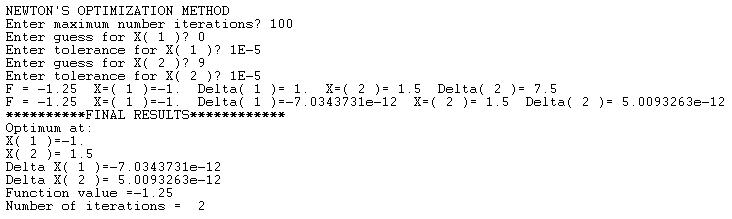

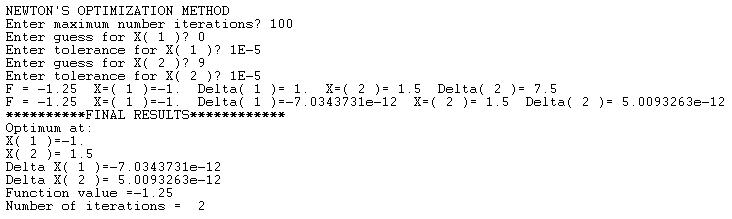

Here is a sample session to solve for the optimum of the above function:

Here is the BASIC listing:

! Basic Newton's Method for Optimization

OPTION TYPO

OPTION NOLET

DECLARE NUMERIC MAX_VARS

DECLARE NUMERIC N, I, F

DECLARE NUMERIC EPSF, fNorm

DECLARE NUMERIC Iter, MaxIter

DECLARE NUMERIC bStop, bTrue, bFalse

Dim X(1), g(1,1)

Dim Toler(1)

Dim DeltaX(1), J(1, 1)

Dim Index(1)

MAX_VARS = 2

bTrue = 1

bFalse = 0

SUB MyFx(X(), N, Res)

! Res = 100 * (X(1) ^ 2 - X(2)) ^ 2 + (1 - X(1)) ^ 2

Res = X(1) - X(2) + 2 * X(1) ^ 2 + 2 * X(1) * X(2) + X(2) ^ 2

END SUB

SUB FirstDeriv(N, X(), iVar, funRes)

LOCAL Xt, h, Fp, Fm

Xt = X(iVar)

h = 0.01 * (1 + Abs(Xt))

X(iVar) = Xt + h

CALL MyFx(X, N, Fp)

X(iVar) = Xt - h

CALL MyFx(X, N, Fm)

X(iVar) = Xt

funRes = (Fp - Fm) / 2 / h

END SUB

SUB SecondDeriv(N, X(), iVar, jVar, funRes)

LOCAL Xt, Yt,HX, HY,F0, Fp, Fm

LOCAL Fpp, Fmm, Fpm, Fmp

! calculate second derivative?

If iVar = jVar Then

CALL MyFx(X, N, F0)

Xt = X(iVar)

HX = 0.01 * (1 + Abs(Xt))

X(iVar) = Xt + HX

CALL MyFx(X, N, Fp)

X(iVar) = Xt - HX

CALL MyFx(X, N, Fm)

X(iVar) = Xt

funRes = (Fp - 2 * F0 + Fm) / HX ^ 2

Else

Xt = X(iVar)

Yt = X(jVar)

HX = 0.01 * (1 + Abs(Xt))

HY = 0.01 * (1 + Abs(Yt))

! calculate Fpp

X(iVar) = Xt + HX

X(jVar) = Yt + HY

CALL MyFx(X, N, Fpp)

! calculate Fmm

X(iVar) = Xt - HX

X(jVar) = Yt - HY

CALL MyFx(X, N, Fmm)

! calculate Fpm

X(iVar) = Xt + HX

X(jVar) = Yt - HY

CALL MyFx(X, N, Fpm)

! calculate Fmp

X(iVar) = Xt - HX

X(jVar) = Yt + HY

CALL MyFx(X, N, Fmp)

X(iVar) = Xt

X(jVar) = Yt

funRes = (Fpp - Fmp - Fpm + Fmm) / (4 * HX * HY)

End If

END SUB

SUB GetFirstDerives(N, X(), FirstDerivX(,))

LOCAL I

For I = 1 To N

CALL FirstDeriv(N, X, I, FirstDerivX(I,1))

Next I

END SUB

SUB GetSecondDerives(N, X(), SecondDerivX(,))

LOCAL I, J

For I = 1 To N

For J = 1 To N

CALL SecondDeriv(N, X, I, J, SecondDerivX(I, J))

Next J

Next I

END SUB

! Basic Newton's Method for Optimization

N = MAX_VARS

MAT REDIM X(N), g(N,1)

MAT REDIM Toler(N)

MAT REDIM DeltaX(N), J(N, N)

MAT REDIM Index(N)

PRINT "NEWTON'S OPTIMIZATION METHOD"

! INPUT PROMPT "Enter function tolerance value? ": epsf

INPUT PROMPT "Enter maximum number iterations? ": MaxIter

For I = 1 To N

PRINT "Enter guess for X(";I;")";

INPUT X(I)

PRINT "Enter tolerance for X(";I;")";

INPUT Toler(I)

Next I

Iter = 0

Do

Iter = Iter + 1

If Iter > MaxIter Then

PRINT "Reached maximum iterations limit"

Exit Do

End If

CALL GetFirstDerives(N, X, g)

! test if gradient is shallow enough

fNorm = 0

For I = 1 To N

fNorm = fNorm + g(I,1)^2

Next I

fNorm = Sqr(fNorm)

! If fNorm < EPSF Then Exit Do

CALL GetSecondDerives(N, X, J)

MAT J = INV(J)

MAT g = J * g

For I = 1 To N

DeltaX(I) = g(I,1)

X(I) = X(I) - DeltaX(I)

Next I

bStop = bTrue

For I = 1 To N

If Abs(DeltaX(I)) > Toler(I) Then

bStop = bFalse

Exit For

End If

Next I

CALL MyFx(X, N, F)

PRINT "F = ";F;" ";

For I = 1 To N

PRINT "X=(";I;")=";X(I);" Delta(";I;")=";DeltaX(I);" ";

Next I

PRINT

Loop Until bStop = bTrue

CALL MyFx(X, N, F)

PRINT "**********FINAL RESULTS************"

PRINT "Optimum at:"

For I = 1 To N

PRINT "X(";I;")=";X(I)

Next I

For I = 1 To N

PRINT "Delta X(";I;")=";DeltaX(I)

Next I

PRINT "Function value ="; F

PRINT "Number of iterations = ";Iter

END

Copyright (c) Namir Shammas. All rights reserved.