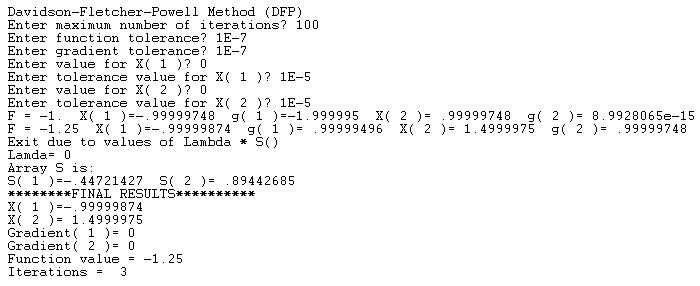

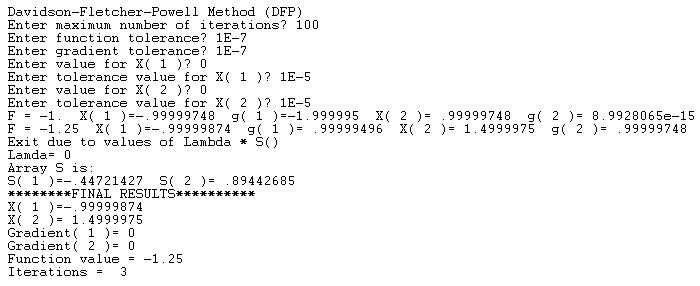

The following program uses the Davidson-Fletcher-Powell (DFP) method to find the minimum of a function. This method is a quasi-Newton method. That is, the DFP algorithm is based on Newton's method but performs different calculations to update the guess refinements.

The program prompts you to enter for each variable (i.e. dimension):

1. The maximum number of iterations.

2. The tolerance for the minimized function,

3. The tolerance for the gradient.

4. The initial guesses for the optimum point for each variable.

5. The tolerance for the guess refinement for each variable.

The program displays intermediate values for the function and the variables. The program displays the following final results:

1. The coordinates of the minimum point.

2. The minimum function value.

3. The number of iterations

The current code finds the minimum for the following function:

f(x1,x2) = x1 - x2 + 2 * x1 ^ 2 + 2 * x1 * x2 + x2 ^ 2

Here is a sample session to solve for the optimum of the above function:

Here is the BASIC listing:

! Davidson-Fletcher-Powell Method (DFP)

OPTION TYPO

OPTION NOLET

DECLARE NUMERIC MAX_VARS

DECLARE NUMERIC N, I, EPSF, EPSG, fNorm, Lambda

DECLARE NUMERIC Iter, MaxIter, F

DECLARE NUMERIC bStop, bOK, boolRes, bTrue, bFalse

Dim X(1), grad1(1,1), grad2(1,1), g(1,1), S(1,1)

Dim d(1,1), Bmat(1,1), Mmat(1,1), Nmat(1,1)

Dim MM1(1,1), MM2(1,1), MM3(1,1), MM4(1,1)

Dim VV1(1,1), Toler(1)

bTrue = 1

bFalse = 0

SUB CalcNorm(X(,), N, FNorm)

LOCAL I

FNorm = 0

For I = 1 To N

FNorm = FNorm + X(I,1)^2

Next I

FNorm = Sqr(FNorm)

END SUB

SUB MyFx(X(), N, Res)

! Res = 100 * (X(1) ^ 2 - X(2)) ^ 2 + (1 - X(1)) ^ 2

Res = X(1) - X(2) + 2 * X(1) ^ 2 + 2 * X(1) * X(2) + X(2) ^ 2

End SUB

SUB FirstDeriv(N, X(), iVar, funRes)

LOCAL Xt, h, Fp, Fm

Xt = X(iVar)

h = 0.01 * (1 + Abs(Xt))

X(iVar) = Xt + h

CALL MyFx(X, N, Fp)

X(iVar) = Xt - h

CALL MyFx(X, N, Fm)

X(iVar) = Xt

funRes = (Fp - Fm) / 2 / h

End SUB

Sub GetFirstDerives(N, X(), FirstDerivX(,))

LOCAL I

For I = 1 To N

CALL FirstDeriv(N, X, I, FirstDerivX(I,1))

Next I

End Sub

SUB MyFxEx(N, X(), DeltaX(,), Lambda, funRes)

LOCAL I, XX(1)

!Dim XX()

MAT REDIM XX(N)

For I = 1 To N

XX(I) = X(I) + Lambda * DeltaX(I,1)

Next I

CALL MyFx(XX, N, funRes)

End SUB

SUB LinSearch_DirectSearch(X(), N, Lambda, DeltaX(,), InitStep, MinStep, boolRes)

LOCAL F1, F2

CALL MyFxEx(N, X, DeltaX, Lambda, F1)

Do

CALL MyFxEx(N, X, DeltaX, Lambda + InitStep, F2)

If F2 < F1 Then

F1 = F2

Lambda = Lambda + InitStep

Else

CALL MyFxEx(N, X, DeltaX, Lambda - InitStep, F2)

If F2 < F1 Then

F1 = F2

Lambda = Lambda - InitStep

Else

! reduce search step size

InitStep = InitStep / 10

End If

End If

Loop Until InitStep < MinStep

boolRes = bTrue

End SUB

! Davidson-Fletcher-Powell Method (DFP)

MAX_VARS = 2

N = MAX_VARS

MAT REDIM Toler(MAX_VARS)

MAT REDIM X(MAX_VARS)

MAT REDIM grad1(MAX_VARS,1)

MAT REDIM grad2(MAX_VARS,1)

MAT REDIM g(MAX_VARS,1)

MAT REDIM S(MAX_VARS,1)

MAT REDIM d(MAX_VARS,1)

MAT REDIM Bmat(MAX_VARS, MAX_VARS)

MAT REDIM Mmat(MAX_VARS, MAX_VARS)

MAT REDIM Nmat(MAX_VARS, MAX_VARS)

MAT REDIM MM1(MAX_VARS, 1)

MAT REDIM MM2(MAX_VARS, MAX_VARS)

MAT REDIM MM3(MAX_VARS, MAX_VARS)

MAT REDIM MM4(MAX_VARS, MAX_VARS)

MAT REDIM VV1(MAX_VARS,1)

PRINT "Davidson-Fletcher-Powell Method (DFP)"

INPUT PROMPT "Enter maximum number of iterations? ": MaxIter

INPUT PROMPT "Enter function tolerance? ": EPSF

INPUT PROMPT "Enter gradient tolerance? ": EPSG

N = MAX_VARS

For I = 1 To N

PRINT "Enter value for X(";I;")";

INPUT X(I)

PRINT "Enter tolerance value for X(";I;")";

INPUT Toler(I)

Next I

! set matrix B as an indentity matrix

MAT Bmat = IDN(MAX_VARS)

Iter = 0

! calculate initial gradient

CALL GetFirstDerives(N, X, grad1)

! start main loop

Do

Iter = Iter + 1

If Iter > MaxIter Then

PRINT "Reached iteration limits"

Exit Do

End If

! calcuate vector S() and reverse its sign

MAT S = Bmat * grad1

MAT S = (-1) * S

! normailize vector S

CALL CalcNorm(S, N, fNorm)

MAT S = (1/fNorm) * S ! objML.NormalizeVect S

Lambda = 0.1

CALL LinSearch_DirectSearch(X, N, Lambda, S, 0.1, 0.00001, boolRes)

! calculate optimum X() with the given Lambda

MAT d = (Lambda) * S

For I = 1 To N

X(I) = X(I) + d(I,1)

Next I

! get new gradient

CALL GetFirstDerives(N, X, grad2)

! calculate vector g() and shift grad2() into grad1()

MAT g = grad2 - grad1

MAT grad1 = grad2

! test for convergence

bStop = bTrue

For I = 1 To N

If Abs(d(I,1)) > Toler(I) Then

bStop = bFalse

Exit For

End If

Next I

If bStop = bTrue Then

PRINT "Exit due to values of Lambda * S()"

PRINT "Lamda="; Lambda

PRINT "Array S is:"

For I = 1 To N

PRINT "S(";I;")=";S(I,1);" ";

Next I

PRINT

Exit Do

End If

! exit if gradient is low

CALL CalcNorm(g, N, fNorm)

If fNorm < EPSG Then

PRINT "Exit due to G Norm"

PRINT "Norm=";fNorm

Exit Do

End If

!-------------------------------------------------

! Start elaborate process to update matrix B

!

! First calculate matrix M (stored as Mmat)

! MM1 = S in matrix form

MAT MM1 = S

! MM2 = S^T in matrix form

MAT MM2 = TRN(S)

! MM3 = g in matrix form

MAT MM3 = g

! Mmat = S * S^T

MAT Mmat = MM1 * MM2

! MM4 = S^T * g

MAT MM4 = MM2 * MM3

! calculate natrix M

MAT Mmat = (Lambda / MM4(1,1)) * Mmat

! Calculate matrix N (stored as Nmat)

! VV1 = {B] g

MAT VV1 = Bmat * g

! MM1 = VV1 in column matrix form ([B] g)

MAT MM1 = VV1

! MM2 = VV1 in Iter matrix form ([B] g)^T

MAT MM2 = TRN(VV1)

! Nmat = ([B] g) * ([B] g)^T

MAT Nmat = MM1 * MM2

! MM1 = g^T

MAT MM1 = TRN(g)

! MM2 = g

MAT MM2 = g

! MM3 = g^T [B]

MAT MM3 = MM1 * Bmat

! MM4 = g^T [B] g

MAT MM4 = MM3 * MM2

! calculate matrix N

MAT Nmat = (-1/MM4(1,1)) * Nmat

! B = B + M

MAT Bmat = Bmat + Mmat

! B = B + N

MAT Bmat = Bmat + Nmat

CALL MyFx(X, N, F)

PRINT "F = ";F;" ";

For I = 1 To N

PRINT "X(";I;")=";X(I);" ";

PRINT "g(";I;")=";g(I,1);" ";

Next I

PRINT

Loop

PRINT "********FINAL RESULTS**********"

For I = 1 To N

PRINT "X(";I;")=";X(I)

Next I

For I = 1 To N

PRINT "Gradient(";I;")=";g(I,1)

Next I

CALL MyFx(X, N, F)

PRINT "Function value = ";F

PRINT "Iterations = ";Iter

END

Copyright (c) Namir Shammas. All rights reserved.